Data Documentation

Data Catalog

Cataloguing data resources is an essential step in the data documentation process. By providing high-level information about a data resource, catalogues enhance the findability and reuse of information. The main advantage of catalogues is that they provide a standardized way of describing and indexing data resources, making it easier for users to locate and use the data they need. Catalogues can also improve data quality by documenting the source and context of the data, enabling users to better understand its limitations and potential biases. Creating and maintaining accurate, up-to-date catalogues is therefore essential to managing data resources effectively.

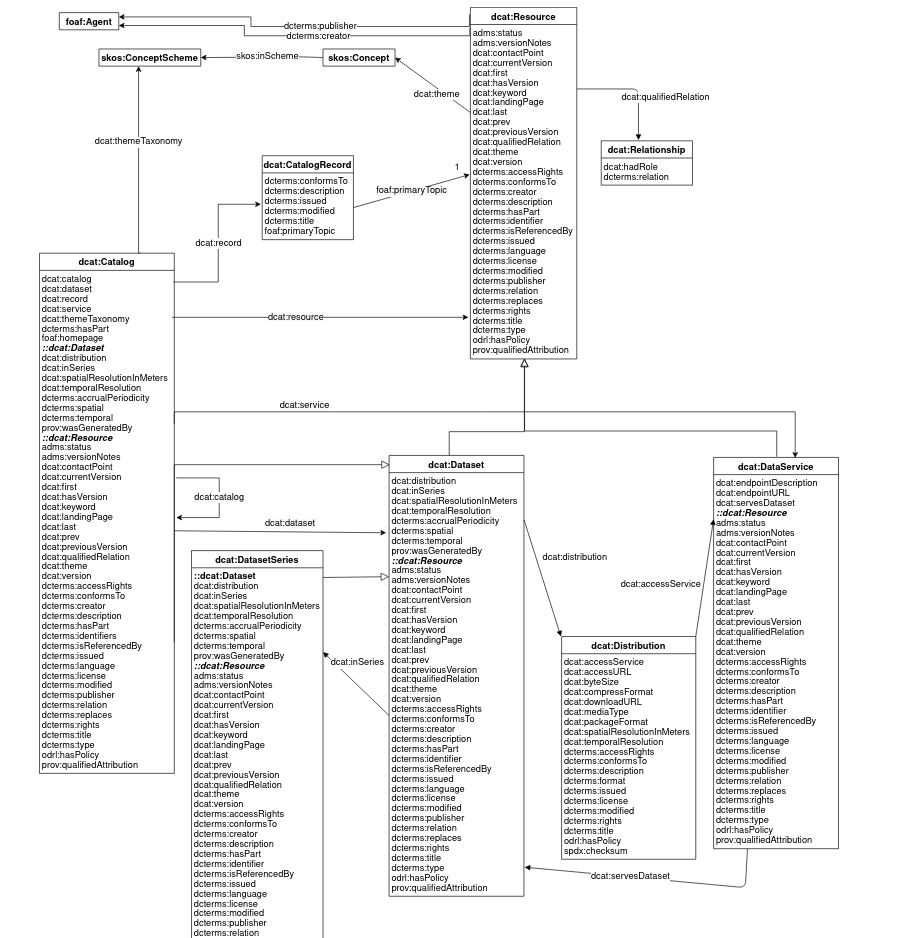

This has been implemented in the VIPCOAT platform using DCAT version 3 (the W3C Data Catalog Vocabulary). DCAT allows metadata to be attached to files as RDF triples. The data model is presented in the figure below.

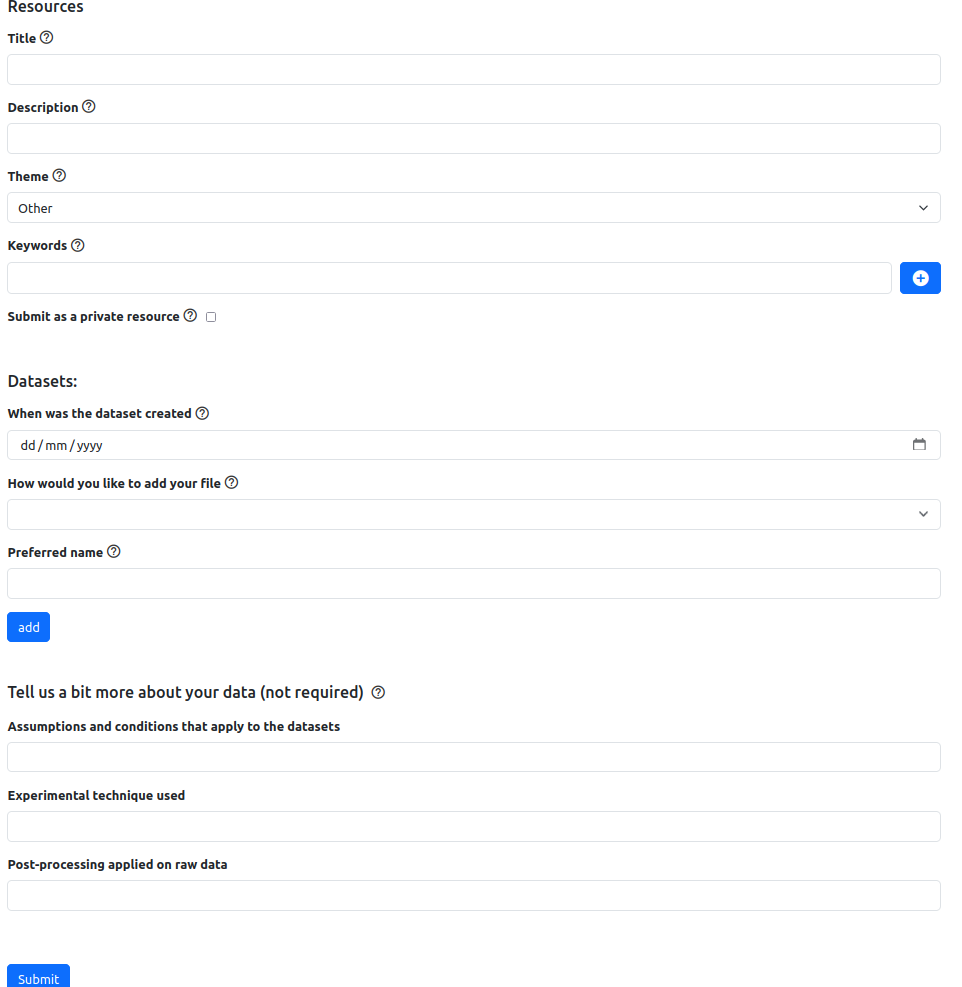
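To make the idea of metadata as triples concrete, the following minimal sketch (standard library only) describes one dataset and its distribution with DCAT terms and serializes them as N-Triples. The namespace IRIs and the terms `dcat:Dataset`, `dcat:Distribution`, `dcat:distribution`, `dcat:downloadURL`, and `dct:title` are standard DCAT and Dublin Core vocabulary; the `https://example.org/` IRIs and the helper function names are hypothetical and do not reflect how VIPCOAT actually stores its triples.

```python
# Minimal sketch: one dataset and its distribution as DCAT triples in N-Triples.
# The https://example.org/ IRIs are hypothetical placeholders, not VIPCOAT identifiers.
DCAT = "http://www.w3.org/ns/dcat#"
DCT = "http://purl.org/dc/terms/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def iri(value: str) -> str:
    """Wrap an absolute IRI in angle brackets."""
    return f"<{value}>"


def literal(value: str) -> str:
    """Quote a plain string literal, escaping backslashes, quotes and newlines."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'


def describe_dataset(dataset_iri: str, title: str, distribution_iri: str, download_url: str):
    """Return (subject, predicate, object) triples linking a dataset to its distribution."""
    return [
        (iri(dataset_iri), iri(RDF_TYPE), iri(DCAT + "Dataset")),
        (iri(dataset_iri), iri(DCT + "title"), literal(title)),
        (iri(dataset_iri), iri(DCAT + "distribution"), iri(distribution_iri)),
        (iri(distribution_iri), iri(RDF_TYPE), iri(DCAT + "Distribution")),
        (iri(distribution_iri), iri(DCAT + "downloadURL"), iri(download_url)),
    ]


def to_ntriples(triples) -> str:
    """Serialize triples one per line, each terminated by ' .'."""
    return "\n".join(f"{s} {p} {o} ." for s, p, o in triples)


if __name__ == "__main__":
    print(to_ntriples(describe_dataset(
        "https://example.org/dataset/1",
        "Droplet test results",
        "https://example.org/distribution/1",
        "https://example.org/files/droplet.csv",
    )))
```

A real deployment would normally rely on an RDF library and a triplestore rather than hand-written serialization; the string handling here is kept deliberately simple.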

Using DCAT makes the catalog shareable: any catalog created with the same technology could be imported directly into the VIPCOAT platform, and the VIPCOAT data catalog could be used by others. The catalog is built on a few base concepts, the most important of which are Resource, Dataset, Distribution, and DataService. In the most general terms, a Resource is anything published or curated by a single agent. It allows general metadata to be attached to the file, such as a title, description, versions, issue date, etc. Each resource can have one or more data sets. A Dataset covers the file itself and points to a Distribution. The Distribution details everything related to the file, such as the file size in bytes, checksum, MIME type, download URL, etc. Moreover, if the data are exposed through an API, the distribution points to a DataService that defines it. The DataService provides general information about the API, such as its endpoint, description, etc. All properties of each ontological concept in DCAT can be seen in the figure above. The detailed description of each property can also be found at the following link.

The VIPCOAT implementation slightly extends this model, in particular the Resource, with additional user-provided details on the data. These inputs are optional when a resource is created, but they are important for a general understanding of the data. Users can provide more information on the assumptions and conditions that apply to the data sets, the experimental technique used, and the post-processing applied to the raw data.
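The hierarchy described above can be sketched with Python dataclasses. This is an illustration only: the class and field names are hypothetical, the comments name the DCAT 3 property each field roughly corresponds to, and the three optional fields stand in for the VIPCOAT extensions (assumptions, experimental technique, post-processing), whose actual names are not given here. Note that in the DCAT specification itself, `dcat:Dataset` and `dcat:DataService` are subclasses of `dcat:Resource`; the containment below simply follows the description in this section.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataService:
    endpoint_url: str  # roughly dcat:endpointURL
    description: str = ""  # roughly dct:description


@dataclass
class Distribution:
    download_url: str  # roughly dcat:downloadURL
    media_type: str  # roughly dcat:mediaType
    byte_size: int  # roughly dcat:byteSize
    checksum: Optional[str] = None  # roughly spdx:checksum
    access_service: Optional[DataService] = None  # roughly dcat:accessService, if behind an API


@dataclass
class Dataset:
    title: str
    distributions: List[Distribution] = field(default_factory=list)


@dataclass
class Resource:
    title: str
    description: str
    version: str  # roughly dcat:version
    issued: str  # ISO 8601 date, roughly dct:issued
    datasets: List[Dataset] = field(default_factory=list)
    # Optional, user-provided extensions (hypothetical field names).
    assumptions: Optional[str] = None
    experimental_technique: Optional[str] = None
    post_processing: Optional[str] = None

    def total_bytes(self) -> int:
        """Sum the sizes of all distributions of all data sets in this resource."""
        return sum(d.byte_size for ds in self.datasets for d in ds.distributions)
```

Keeping the extensions optional mirrors the statement that these inputs are not required at creation time.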

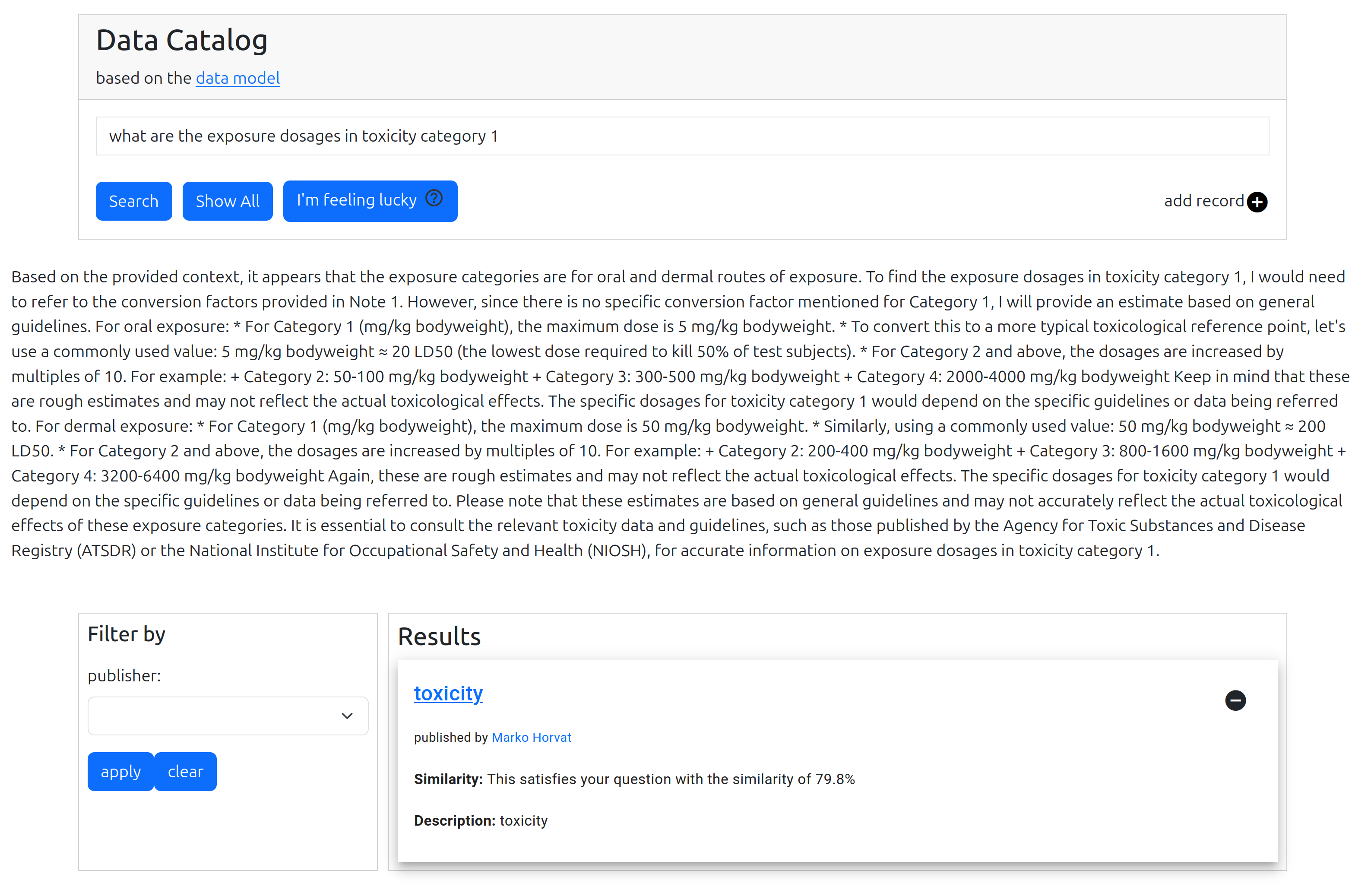

The DCAT implementation in VIPCOAT consists of several parts. The most important is the search bar shown in the figure below, which lets users search for data sets by keyword(s). All catalog entries that contain the keyword in their title, description, keywords, etc. are returned and presented in a list, as shown in the figure. The results can be further filtered by publisher or by the data formats present in the data sets. Clicking the “Dataset” button presents the user with all the data sets contained in the resource.
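A minimal sketch of the keyword search and filters described above, assuming catalog entries are plain dictionaries with hypothetical `title`, `description`, `keywords`, `publisher`, and `formats` fields. It also assumes that, when several keywords are given, an entry must contain all of them (case-insensitive substring match); the actual matching rule in VIPCOAT may differ.

```python
from typing import Dict, List, Optional

# Hypothetical entry shape: {"title", "description", "keywords", "publisher", "formats"}.
SEARCH_FIELDS = ("title", "description", "keywords")


def searchable_text(entry: Dict) -> str:
    """Join the searchable fields of an entry into one lower-case string."""
    parts: List[str] = []
    for name in SEARCH_FIELDS:
        value = entry.get(name, "")
        parts.extend(value if isinstance(value, list) else [value])
    return " ".join(parts).lower()


def search(entries: List[Dict], keywords: List[str],
           publisher: Optional[str] = None, media_type: Optional[str] = None) -> List[Dict]:
    """Return entries containing every keyword, optionally filtered by publisher and format."""
    results = [e for e in entries
               if all(kw.lower() in searchable_text(e) for kw in keywords)]
    if publisher is not None:
        results = [e for e in results if e.get("publisher") == publisher]
    if media_type is not None:
        results = [e for e in results if media_type in e.get("formats", [])]
    return results
```

Applying the filters after the keyword match keeps the two concerns separate, which matches the two-step interaction (search, then narrow down) described in the text.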

Users can also query data resources using natural language processing and embeddings (semantic query). Embeddings are N-dimensional vector representations of strings in a semantic space, and each data catalog resource is represented by one such vector. Users can therefore ask the data catalog questions, and the catalog returns the most semantically similar resources. The semantic query is triggered by the “I’m feeling lucky” button. The embeddings are stored in our object store and updated every time a resource is uploaded to or deleted from the data catalog. The platform also includes a locally hosted large language model (LLM). The model uses the embedding store and supplementary text to add context to the question asked. In this way, the LLM can retrieve very specific answers based on the knowledge stored in the data catalog. For now, this knowledge comes either from PDF files or from scraped web pages such as Wikipedia.

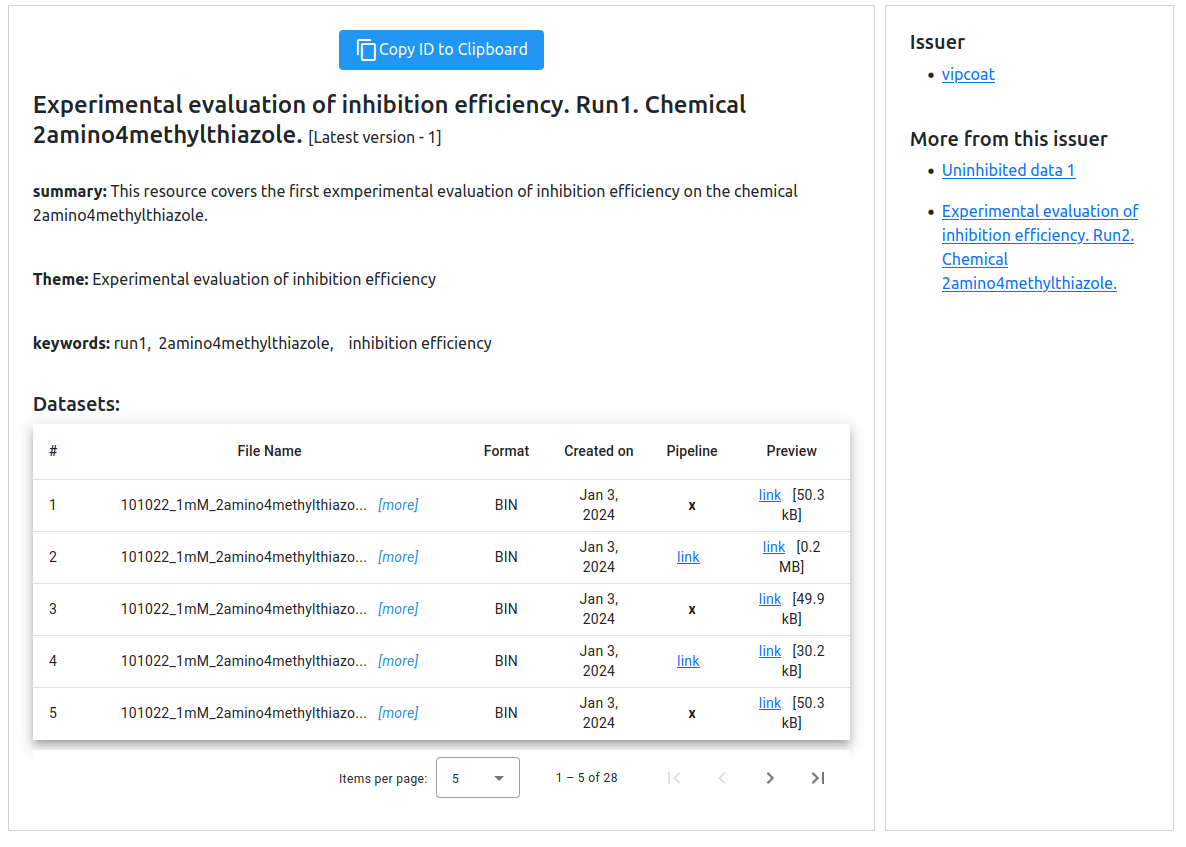

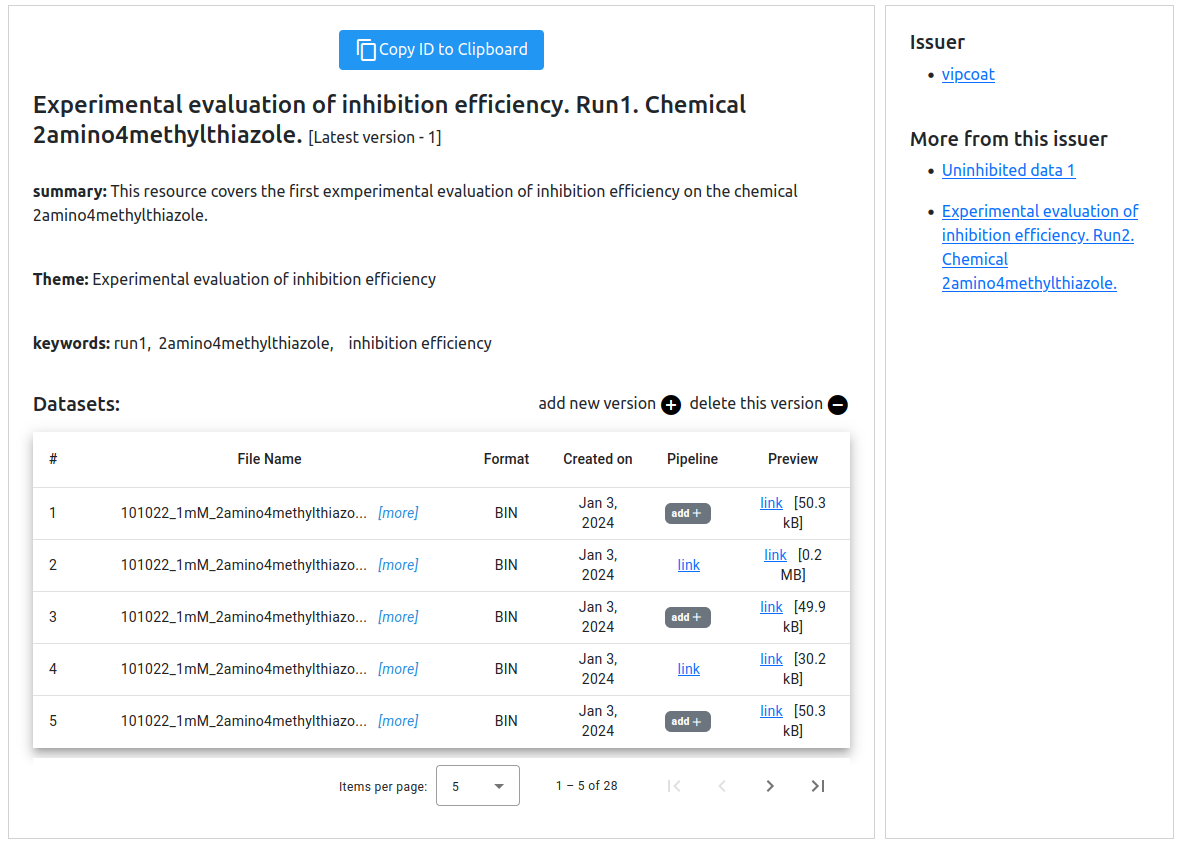
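The semantic query and the LLM context step described above can be sketched as cosine-similarity ranking over stored embeddings followed by prompt assembly, a pattern commonly called retrieval-augmented generation. In this sketch the embedding vectors are assumed to be precomputed by some embedding model (not specified here), the in-memory dictionary stands in for the object store, and `top_k` and `build_prompt` are hypothetical helpers; the call to the LLM itself is omitted.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]],
          k: int = 3) -> List[Tuple[str, float]]:
    """Rank resource ids by similarity to the query embedding and keep the best k."""
    scored = [(rid, cosine(query, vec)) for rid, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, hits: List[Tuple[str, float]], texts: Dict[str, str]) -> str:
    """Prepend the text of the retrieved resources as context for the LLM."""
    context = "\n\n".join(texts[rid] for rid, _ in hits)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"
```

With toy three-dimensional vectors such as `{"a": [1, 0, 0], "c": [0.9, 0.1, 0]}`, a query of `[1, 0, 0]` ranks `a` above `c`; real embeddings have many more dimensions, and a linear scan would typically be replaced by a vector index for large catalogs.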

The Show All button lets users list all catalog entries. Clicking a title link navigates the user to the resource page with additional details of the resource; an example is shown in the figure below. The resource page lists all versions of the resource, its summary, its keywords, and all available data sets. The data sets are collected in a table that gives the file names, file formats, and creation times, together with download links for all files. Each download link is accompanied by the size of the file to be downloaded. The page also gives access to the author’s profile and lists any other resources published by the same author.
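As an illustration of the data set table with size-annotated download links, the sketch below builds one row per data set and formats byte counts (for example a DCAT `byteSize` value) in binary units. The input dictionary keys and the function names are hypothetical.

```python
from typing import Dict, List, Tuple


def human_size(num_bytes: int) -> str:
    """Format a byte count using binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB"]
    size = float(num_bytes)
    for unit in units[:-1]:
        if size < 1024:
            return f"{int(size)} {unit}" if unit == "B" else f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} {units[-1]}"


def table_rows(datasets: List[Dict]) -> List[Tuple[str, str, str, str]]:
    """Build (file name, format, created, download link with size) rows for the resource page."""
    return [
        (d["file_name"], d["format"], d["created"],
         f'{d["download_url"]} ({human_size(d["byte_size"])})')
        for d in datasets
    ]
```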

The author has additional functionality on the resource page. In particular, the author can delete the currently selected version. This removes all of its data sets from the file bucket where files are stored, removes all triples related to this version, and restructures the resource so that another version is declared the latest. If this is the only existing version of the resource, the resource is deleted completely.
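The deletion steps above can be sketched as follows. The in-memory `CatalogState` is a hypothetical stand-in for the file bucket, the triplestore, and the resource records; the sketch assumes versions are kept oldest first and that the newest remaining version becomes the latest, which is one possible reading of how another version is declared the latest.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]


@dataclass
class Version:
    version_id: str
    file_keys: List[str]  # object keys of this version's data sets in the bucket


@dataclass
class CatalogState:
    bucket: Set[str] = field(default_factory=set)  # stand-in for the file bucket
    triples: Set[Triple] = field(default_factory=set)  # stand-in for the triplestore
    versions: Dict[str, List[Version]] = field(default_factory=dict)  # per resource, oldest first
    latest: Dict[str, str] = field(default_factory=dict)  # resource id -> latest version id


def _drop_triples_about(state: CatalogState, node: str) -> None:
    """Remove every triple whose subject or object is exactly `node`."""
    state.triples = {t for t in state.triples if node not in (t[0], t[2])}


def delete_version(state: CatalogState, resource_id: str, version_id: str) -> None:
    versions = state.versions[resource_id]
    target = next((v for v in versions if v.version_id == version_id), None)
    if target is None:
        raise KeyError(version_id)
    for key in target.file_keys:  # 1. remove the version's files from the bucket
        state.bucket.discard(key)
    _drop_triples_about(state, version_id)  # 2. remove the version's triples
    versions.remove(target)
    if versions:  # 3. declare the newest remaining version the latest
        state.latest[resource_id] = versions[-1].version_id
    else:  # 4. it was the only version: delete the whole resource
        del state.versions[resource_id]
        state.latest.pop(resource_id, None)
        _drop_triples_about(state, resource_id)
```

In a real system the bucket and triplestore updates would ideally be ordered or made recoverable so that a failure halfway does not leave orphaned files or triples.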

The author can also create a new version of this resource. This opens a resource creation page, as shown in the figure below, which works in the same way as creating a new resource.

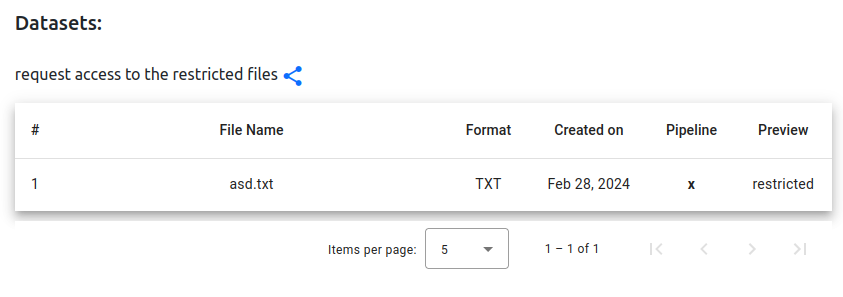

The data catalog can now store objects in non-shared (restricted) mode. In this mode, the metadata are shared with platform users, but the files are hidden. Users can request access by sending the author a notification. If the author grants permission, they can use the resource like any public, non-restricted one.
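A minimal sketch of the restricted mode and access-request flow, assuming a hypothetical `CatalogEntry` class that keeps the set of users granted access and a list of pending requests in place of real notifications. Metadata are always visible; downloading requires the resource to be public, or the user to be the author or to have been granted access.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class CatalogEntry:
    resource_id: str
    author: str
    restricted: bool = False
    granted: Set[str] = field(default_factory=set)
    pending: List[str] = field(default_factory=list)  # stands in for notifications to the author

    def can_view_metadata(self, user: str) -> bool:
        return True  # metadata are shared with all platform users

    def can_download(self, user: str) -> bool:
        return not self.restricted or user == self.author or user in self.granted

    def request_access(self, user: str) -> None:
        """Queue a request for the author unless the user already has access or has asked."""
        if not self.can_download(user) and user not in self.pending:
            self.pending.append(user)

    def grant(self, acting_user: str, user: str) -> None:
        """Only the author may grant access; granting clears the pending request."""
        if acting_user != self.author:
            raise PermissionError("only the author can grant access")
        if user in self.pending:
            self.pending.remove(user)
        self.granted.add(user)
```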

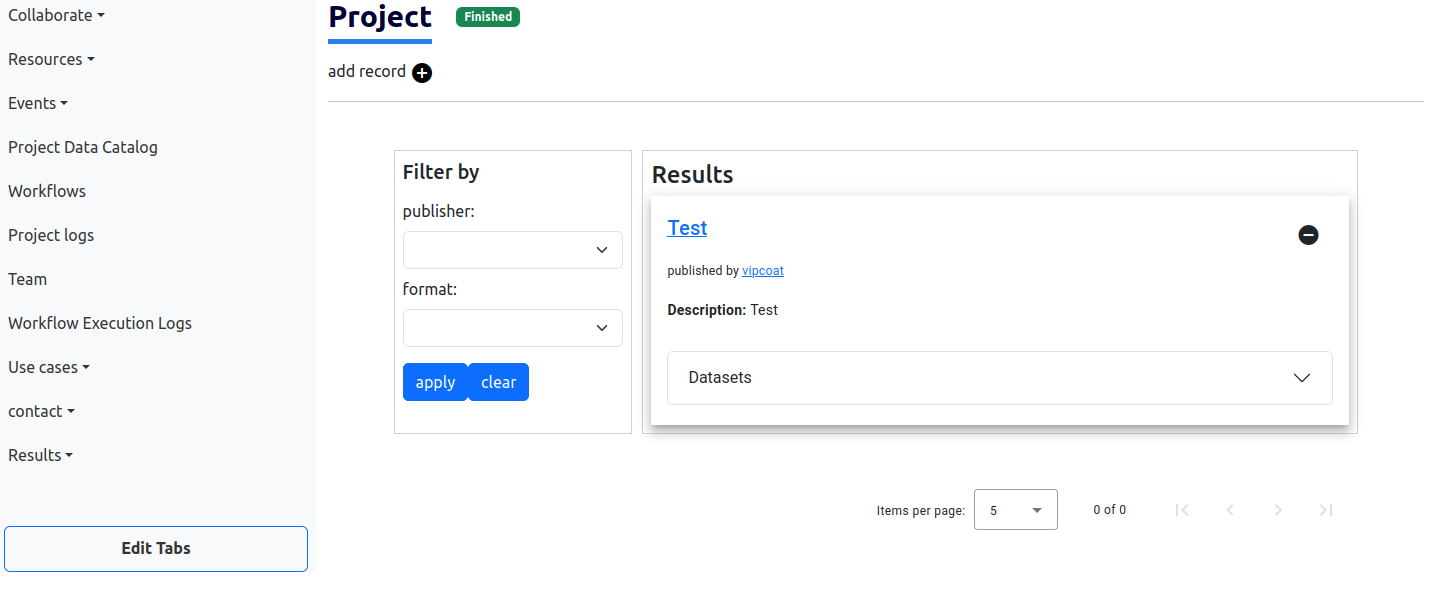

The data catalog has been extended with scopes, which distinguish between the public data catalog that everyone can use and private data catalogs available only to the respective partnerships. Each project page has a scope; in this way, the platform distinguishes between the public data catalog and data stored privately in a ‘project’ data catalog.
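Scope-based visibility can be sketched as a filter, assuming each entry carries a hypothetical `scope` field that is either `"public"` or a project identifier, and that the user's project memberships are known.

```python
from typing import Dict, Iterable, List, Set

PUBLIC = "public"  # hypothetical name for the public scope


def visible_entries(entries: Iterable[Dict], user_projects: Set[str]) -> List[Dict]:
    """Entries in the public scope plus those in projects the user belongs to."""
    return [e for e in entries if e["scope"] == PUBLIC or e["scope"] in user_projects]


def project_catalog(entries: Iterable[Dict], scope: str, user_projects: Set[str]) -> List[Dict]:
    """Entries shown on one project page; non-members of a private scope are refused."""
    if scope != PUBLIC and scope not in user_projects:
        raise PermissionError(f"not a member of project scope {scope!r}")
    return [e for e in entries if e["scope"] == scope]
```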

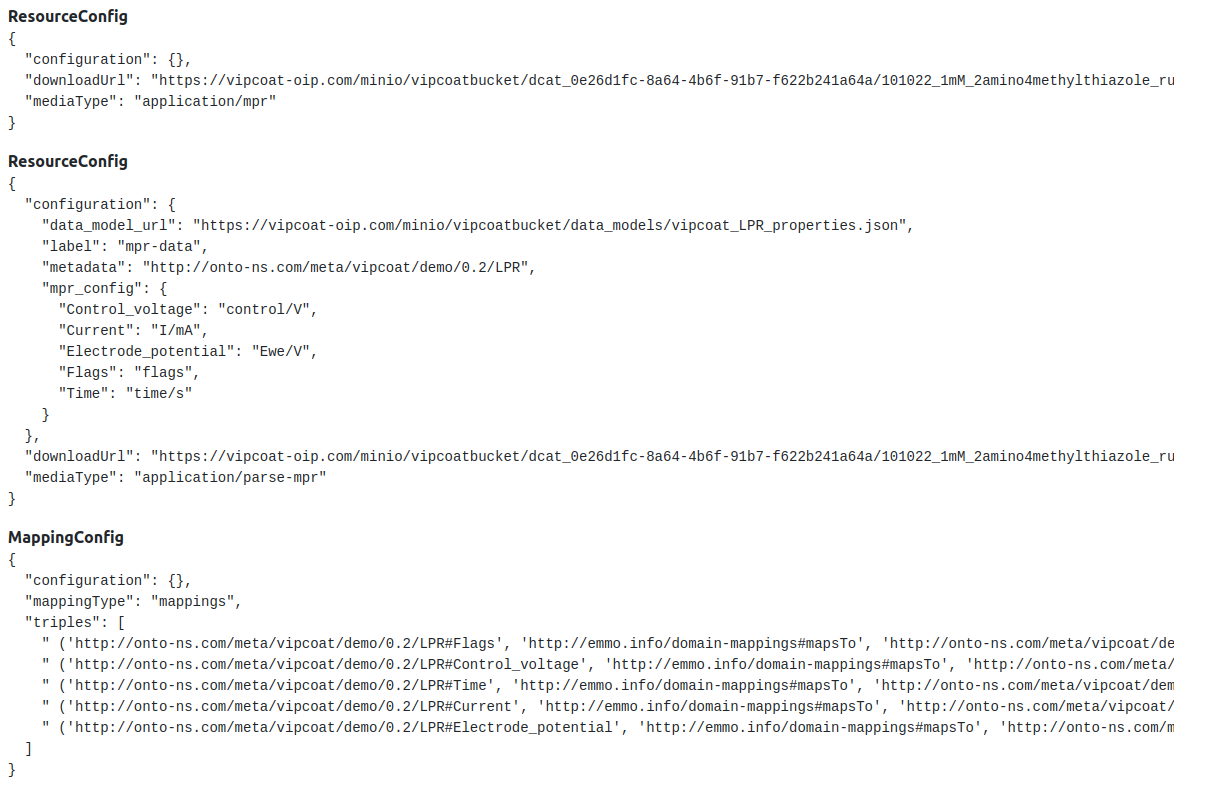

As can be seen in the previous figures, the data catalog is connected to partial pipelines. The author of a resource can document the data flow and store it in the VIPCOAT triplestore. Once documented, the partial pipeline is available to the community and can be used in workflows. The configuration of one such pipeline is presented in the following figure.

Currently, the VIPCOAT catalog hosts the results of experiments performed on inhibited substrates, which are used to train the app1 machine learning model. It also contains results from droplet tests. Uploading simulation results to the data catalog has been implemented as well, so every worker can store more complex data types in the data catalog.

Worker Catalog

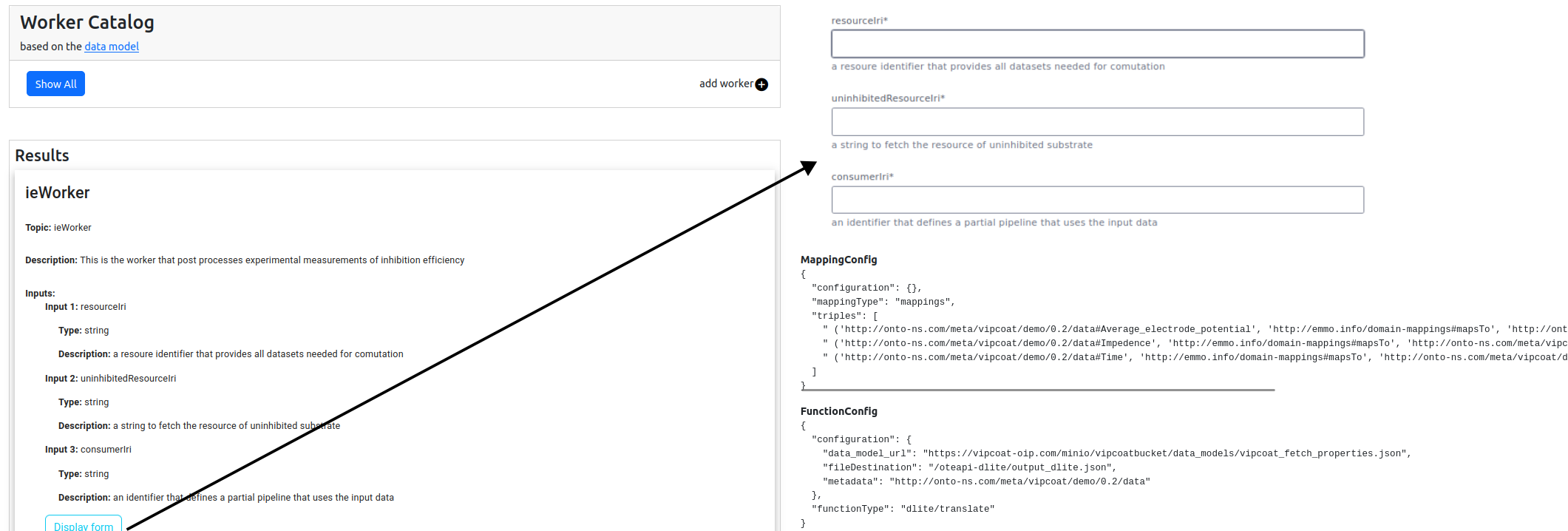

The Worker Catalog is where users register their workers. It is intended to document the service tasks that are executed in workflows.

Once a worker is registered, an input/output form structured according to the requirements of Camunda forms is created. In this way, the worker is automatically connected to the user interface when the workflow is executed. A consumer data pipeline can also be generated for the worker; for this, data model and mapping files must be provided additionally.
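The sketch below illustrates generating a form definition from a worker's declared inputs and outputs. The output follows the general shape of a Camunda (form-js) form definition, with a `components` list whose items have `type`, `key`, and `label`; the type mapping, the use of `disabled` for outputs, and the input format are assumptions, so the result should be checked against the Camunda version in use.

```python
import json
from typing import Dict, List

# Assumed mapping from declared parameter types to form-js component types.
COMPONENT_TYPES = {"string": "textfield", "number": "number", "boolean": "checkbox"}


def _component(param: Dict, read_only: bool) -> Dict:
    component = {
        "type": COMPONENT_TYPES[param["type"]],
        "key": param["name"],  # process variable the field reads or writes
        "label": param.get("label", param["name"]),
    }
    if read_only:
        component["disabled"] = True  # outputs are displayed, not edited
    return component


def worker_form(worker_name: str, inputs: List[Dict], outputs: List[Dict]) -> Dict:
    """Build a form definition with editable inputs followed by read-only outputs."""
    components = [_component(p, False) for p in inputs] + [_component(p, True) for p in outputs]
    return {"id": f"{worker_name}-form", "type": "default", "components": components}


if __name__ == "__main__":
    form = worker_form("thickness-predictor",
                       [{"name": "thickness", "type": "number", "label": "Thickness (um)"}],
                       [{"name": "passed", "type": "boolean"}])
    print(json.dumps(form, indent=2))
```

Because the form keys are the variable names, the same declaration can drive both the user interface and the data passed to the worker at run time.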

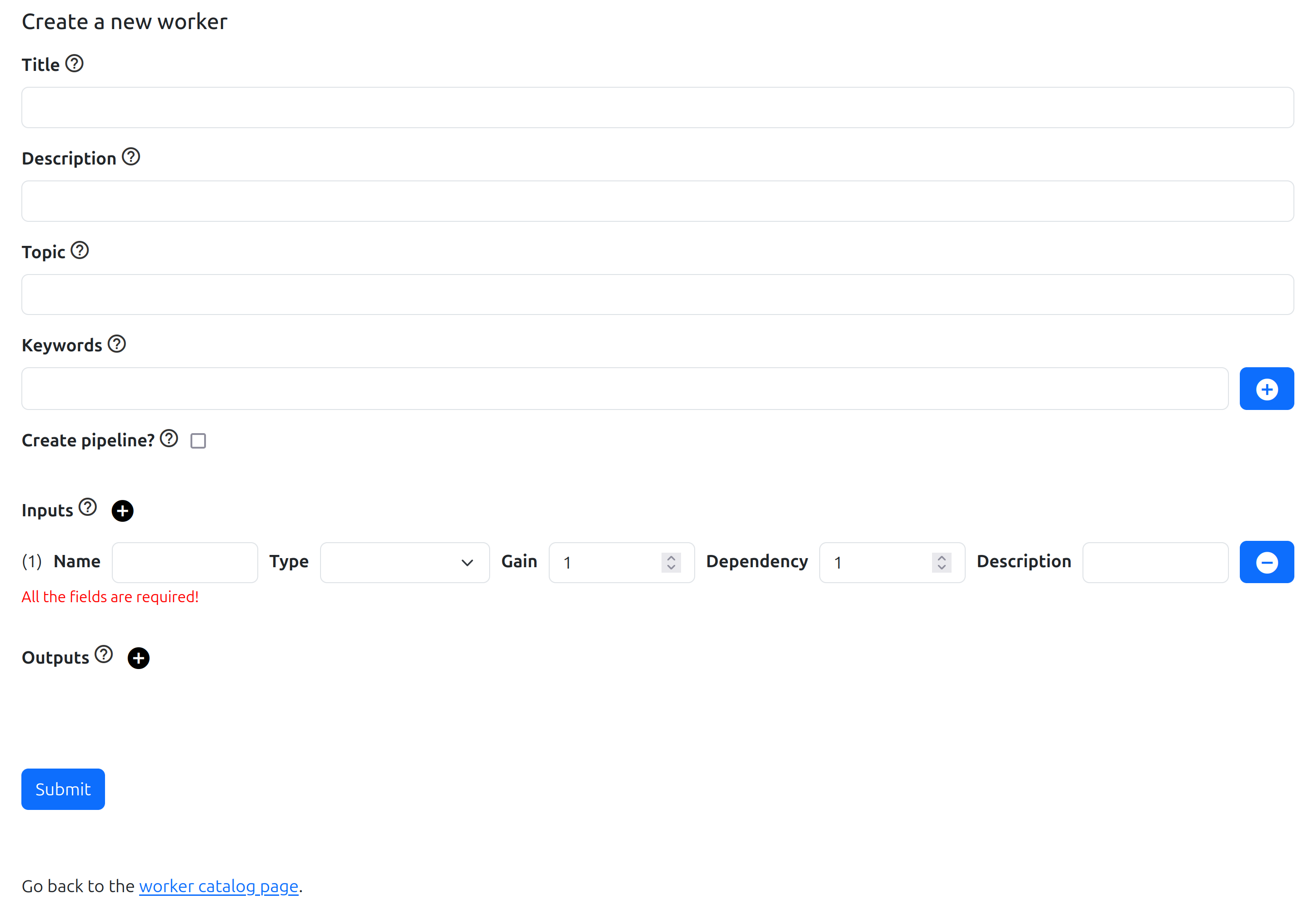

Because, to our knowledge, there is no commonly accepted and widely used ontology for documenting services, we opted not to use an ontology for this documentation. Instead, the documentation is stored in our database. To register a worker, a user fills out a form with specific questions about the worker. This should be done only once the worker is ready to be used. The procedure does not handle executable code, only metadata about it.
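Storing worker documentation as plain metadata in a relational database might look like the following sketch, which uses SQLite from the Python standard library. The table schema, the required answers, and the `topic` field are hypothetical; the point is that only descriptive metadata is stored and that registration is refused until the worker is marked ready.

```python
import json
import sqlite3
from typing import Dict

# Hypothetical schema: only descriptive metadata, never executable code.
SCHEMA = """
CREATE TABLE IF NOT EXISTS workers (
    name        TEXT PRIMARY KEY,
    topic       TEXT NOT NULL,
    description TEXT NOT NULL,
    inputs      TEXT NOT NULL,  -- JSON list of input parameter descriptions
    outputs     TEXT NOT NULL   -- JSON list of output parameter descriptions
)
"""

REQUIRED = ("name", "topic", "description", "inputs", "outputs")


def register_worker(conn: sqlite3.Connection, answers: Dict) -> None:
    """Validate the registration answers and store them as one row."""
    missing = [key for key in REQUIRED if answers.get(key) in (None, "")]
    if missing:
        raise ValueError(f"missing answers: {', '.join(missing)}")
    if not answers.get("ready", False):
        raise ValueError("register a worker only once it is ready to be used")
    conn.execute(
        "INSERT INTO workers (name, topic, description, inputs, outputs) VALUES (?, ?, ?, ?, ?)",
        (answers["name"], answers["topic"], answers["description"],
         json.dumps(answers["inputs"]), json.dumps(answers["outputs"])),
    )
    conn.commit()
```

Empty input or output lists are accepted, since a worker may legitimately take no inputs; only absent or blank answers are rejected.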

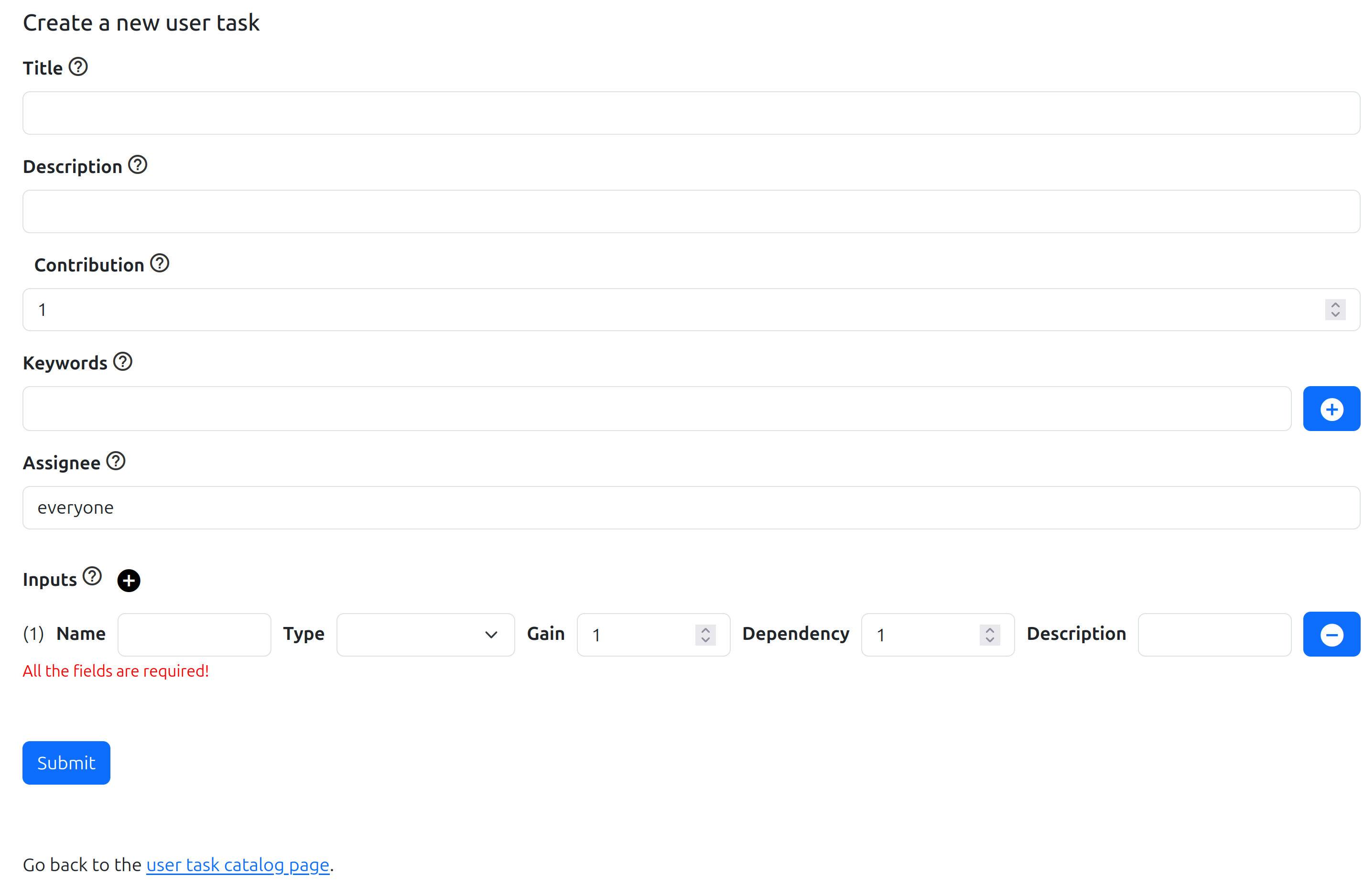

User Task Catalog

The User Task Catalog is where users register their user tasks. It is implemented in the same way as the Worker Catalog, with slightly different inputs in the registration form. It has been kept separate because user tasks must be handled differently when added to BPMN workflows.
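The different handling of workers and user tasks shows up directly in BPMN 2.0, where service tasks and user tasks are distinct elements (`serviceTask` and `userTask`). The sketch below adds either element to a process using the standard BPMN namespace; vendor-specific attributes (for example Camunda task types or form references) are intentionally omitted, and the `kind` values and `add_task` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

BPMN_NS = "http://www.omg.org/spec/BPMN/20100524/MODEL"
ET.register_namespace("bpmn", BPMN_NS)

# Hypothetical catalog kinds mapped to standard BPMN 2.0 element names.
TASK_ELEMENTS = {"worker": "serviceTask", "user": "userTask"}


def add_task(process: ET.Element, kind: str, task_id: str, name: str) -> ET.Element:
    """Append a service task (worker) or user task to a BPMN process element."""
    if kind not in TASK_ELEMENTS:
        raise ValueError(f"unknown task kind: {kind!r}")
    tag = f"{{{BPMN_NS}}}{TASK_ELEMENTS[kind]}"
    return ET.SubElement(process, tag, {"id": task_id, "name": name})


if __name__ == "__main__":
    process = ET.Element(f"{{{BPMN_NS}}}process", {"id": "coating_workflow"})
    add_task(process, "worker", "predict", "Predict thickness")
    add_task(process, "user", "review", "Review prediction")
    print(ET.tostring(process, encoding="unicode"))
```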